Your voice is music to my ears

A specialized tool for identifying pitch categories in speech and generating an accurate bass line for a speaker from low-quality audio samples.

The tool, named “Garfunkel,” is based on a convolutional algorithm and achieves higher accuracy than MIRtoolbox for MATLAB.

It will soon be available to researchers under a standard MIT license.
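The page does not describe Garfunkel's convolutional algorithm. For readers new to pitch estimation, the following is a minimal sketch of a classical autocorrelation-based F0 estimator. It is a different and much simpler technique, shown only to illustrate the kind of measurement a pitch tool works from. The function name `estimate_f0` and the 60 to 400 Hz search range are assumptions and are not part of Garfunkel.

```python
import numpy as np

def estimate_f0(frame, sr, fmin=60.0, fmax=400.0):
    # Classical autocorrelation pitch estimate (NOT Garfunkel's convolutional method).
    # Returns the fundamental frequency, in Hz, of a single voiced frame.
    frame = frame - frame.mean()
    # Autocorrelation for non-negative lags only (index 0 is lag 0).
    ac = np.correlate(frame, frame, mode="full")[len(frame) - 1:]
    # Search only lags corresponding to a plausible speech pitch range (assumed).
    lag_min, lag_max = int(sr / fmax), int(sr / fmin)
    lag = lag_min + int(np.argmax(ac[lag_min:lag_max + 1]))
    return sr / lag

# Synthetic "voiced" frame: a 160 Hz fundamental, one harmonic, and a little noise.
rng = np.random.default_rng(0)
sr = 16000
t = np.arange(int(0.04 * sr)) / sr
frame = np.sin(2 * np.pi * 160 * t) + 0.5 * np.sin(2 * np.pi * 320 * t)
frame += 0.1 * rng.standard_normal(t.size)
print(estimate_f0(frame, sr))
```

Because the lag is an integer number of samples, the estimate is quantized (about 1.6 Hz steps near 160 Hz at 16 kHz); noisy, low-quality recordings are exactly where such simple estimators struggle and learned models can help.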

Goal

Researchers

What we did

Results

Forthcoming

Urban autonomy in a neo-nationalist age: The Case Study of Tel-Aviv-Jaffa

This research stems from a paradox: as neo-nationalist, right-wing, or populist governments rise to power in many countries, central cities—such as Budapest, Warsaw, Istanbul, and Tel-Aviv-Jaffa—are emerging as “islands” of liberal and progressive governance. In this process, cities are increasingly pushing to influence significant policy issues, including refugees, civil rights, energy, and the environment—areas traditionally regarded as the responsibility of the state.

This research focuses on Tel-Aviv-Jaffa as a case study. We hypothesize that the city is home to a large group of academic, intellectual, and cultural elites who hold liberal and often cosmopolitan values. For many years, these groups’ interests aligned with national policies, but today they find themselves a demographic and political minority in the country. They feel detached from political power and, equally importantly, alienated from the state’s current official values.

In response, these elites are seeking alternative political avenues. Specifically, they look to the city and its institutions as tools to advance policies that reflect their values and protect their interests. By leveraging local government, they aim to maintain their existing power and re-engage with other social groups and the national government from a more secure institutional position.

Our findings, based on a resident survey, identify and characterize the city’s urban elites and explore their expectations of the local government. This study has broader implications for cities worldwide that find themselves in similar situations, resisting state policies and becoming politically energized in the face of rising neo-nationalist trends at the national level.

Goal

This research identifies and characterizes Tel-Aviv-Jaffa's urban elites and examines how they look to the local government to reflect their values and address their expectations amid a shifting national political landscape.

Researchers

What we did

In progress

Results

Forthcoming

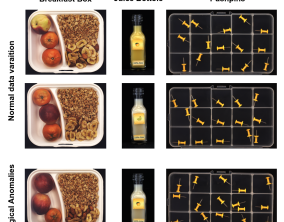

Anomaly detection using set representations and density estimation

Anomaly detection aims to automatically identify samples that exhibit unexpected behavior. We tackle the challenging task of detecting anomalies that consist of an unusual combination of normal elements (“logical anomalies”). For example, consider the case where normal images contain two screws and two nuts, but anomalous images may contain one screw and three nuts. As the individual elements (nuts or screws) also occur in normal images, previous anomaly detection methods relying on anomalous patches would not succeed. Instead, a more holistic understanding of the image is required. We propose to detect logical anomalies using set representations, scoring anomalies by density estimation on the set of representations of local elements. Our simple-to-implement approach outperforms the state of the art in image-level logical anomaly detection and sequence-level time series anomaly detection. You can check out the preprint at: https://arxiv.org/pdf/2302.12245.pdf
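To make the screws-and-nuts example concrete, here is a toy sketch under heavy simplifying assumptions: each image is already reduced to a list of detected element labels, a patch-style detector flags only element types never seen in training, and the set representation is simply the count of each element type, scored by distance to the nearest normal image. All names (`element_scores`, `set_representation`, `set_score`) are hypothetical, and this is not the authors' implementation; it only shows why element-level scoring misses a logical anomaly while a set-level score catches it.

```python
from collections import Counter

ELEMENT_TYPES = ("screw", "nut")  # hypothetical element vocabulary

def element_scores(image, known_types):
    # Patch-style detector: an element is anomalous only if its type was never seen.
    return [0.0 if e in known_types else 1.0 for e in image]

def set_representation(image):
    # Represent the whole image by how many elements of each type it contains.
    counts = Counter(image)
    return tuple(counts[t] for t in ELEMENT_TYPES)

def set_score(image, train_sets):
    # 1-nearest-neighbor L1 distance to the set representations of normal images.
    rep = set_representation(image)
    return min(sum(abs(a - b) for a, b in zip(rep, ref)) for ref in train_sets)

train = [["screw", "screw", "nut", "nut"], ["nut", "screw", "nut", "screw"]]
known = {e for img in train for e in img}
train_sets = [set_representation(img) for img in train]

normal = ["screw", "nut", "screw", "nut"]
logical_anomaly = ["screw", "nut", "nut", "nut"]

print(max(element_scores(logical_anomaly, known)))  # 0.0: every element looks normal
print(set_score(normal, train_sets))                # 0
print(set_score(logical_anomaly, train_sets))       # 2
```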

Goal

Set Features for Fine-grained Anomaly Detection

Researchers

What we did

Fine-grained anomaly detection has recently been dominated by segmentation-based approaches. These approaches first classify each element of the sample (e.g., image patch) as normal or anomalous and then classify the entire sample as anomalous if it contains anomalous elements. However, such approaches do not extend to scenarios where the anomalies are expressed by an unusual combination of normal elements. We overcome this limitation by proposing set features that model each sample by the distribution of its elements. We compute the anomaly score of each sample using a simple density estimation method. Our simple-to-implement approach outperforms the state of the art in image-level logical anomaly detection (+3.4%) and sequence-level time series anomaly detection (+2.4%).
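A minimal sketch of modeling a sample by the distribution of its elements, assuming each sample arrives as a matrix of local element embeddings (in practice e.g. patch features from a pretrained network; here synthetic vectors). The set feature is a normalized histogram of the elements along random projection directions, and the density estimate is a k-nearest-neighbor distance. Both choices, and every function name, are illustrative assumptions; the paper's exact feature construction and density estimator may differ.

```python
import numpy as np

def set_feature(elements, projections, lo, hi, n_bins):
    # elements: (n_elements, dim). Project every element onto each random direction
    # and histogram the projected values, so the feature describes the distribution
    # of the elements rather than any single element.
    proj = np.clip(elements @ projections, lo, hi)
    hists = [np.histogram(proj[:, j], bins=n_bins, range=(lo[j], hi[j]))[0]
             for j in range(projections.shape[1])]
    return np.concatenate(hists) / len(elements)

def knn_score(train_feats, feat, k=3):
    # k-NN density estimate: a larger mean distance to the k closest normal
    # set features means lower density, i.e. a more anomalous sample.
    dists = np.linalg.norm(train_feats - feat, axis=1)
    return np.sort(dists)[:k].mean()

rng = np.random.default_rng(0)
dim, n_proj, n_bins = 16, 32, 8
projections = rng.standard_normal((dim, n_proj))
centers = 3 * rng.standard_normal((2, dim))  # two element types, e.g. "screw" and "nut"

def make_sample(n_screws, n_nuts):
    # Every element is a normal-looking embedding of one of the two types.
    types = [0] * n_screws + [1] * n_nuts
    return centers[types] + 0.1 * rng.standard_normal((len(types), dim))

train = [make_sample(4, 4) for _ in range(50)]
all_proj = np.concatenate(train) @ projections
lo, hi = all_proj.min(axis=0), all_proj.max(axis=0)  # bin ranges from normal data
train_feats = np.stack([set_feature(s, projections, lo, hi, n_bins) for s in train])

normal_score = knn_score(train_feats, set_feature(make_sample(4, 4), projections, lo, hi, n_bins))
anomaly_score = knn_score(train_feats, set_feature(make_sample(1, 7), projections, lo, hi, n_bins))
print(normal_score, anomaly_score)
```

Each element of the one-screw, seven-nut sample lies close to a normal element, so only the shift in the element distribution separates it from the normal samples.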

Results

Preprint: https://arxiv.org/pdf/2302.12245.pdf

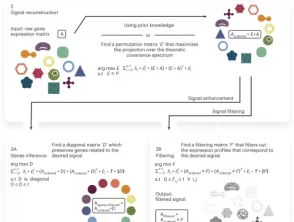

Infer, filter and enhance topological signals in single-cell data using spectral template matching

Goal

Infer, filter and enhance topological signals in single-cell data using spectral template matching

Researchers

- Jonathan Karin

- Yonathan Bornfeld

- Mor Nitzan

What we did

We developed scPrisma, a spectral template-matching framework for inferring, filtering and enhancing topological signals in single-cell data, and applied it to the analysis of the cell cycle in HeLa cells, circadian rhythm and spatial zonation in liver lobules, the diurnal cycle in Chlamydomonas and circadian rhythm in the suprachiasmatic nucleus of the brain. scPrisma can be used to distinguish mixed cellular populations by specific characteristics, such as cell type, and to uncover regulatory networks and cell–cell interactions specific to predefined biological signals, such as the circadian rhythm. We show scPrisma’s flexibility in incorporating prior knowledge, inferring topologically informative genes and generalizing to additional diverse templates and systems. scPrisma can be used as a stand-alone workflow for signal analysis and as a step prior to downstream single-cell analysis.
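As a rough illustration of what filtering with a cyclic spectral template can mean, the sketch below assumes the cells are already ordered along a cycle, builds a hypothetical Gaussian circulant template, and keeps only each gene's component in the span of the template's leading eigenvectors (its lowest-frequency modes). The names `circular_template` and `spectral_filter` and the parameters `bandwidth` and `n_components` are assumptions; this is a simplified stand-in, not scPrisma's algorithm or API.

```python
import numpy as np

def circular_template(n_cells, bandwidth=0.1):
    # Hypothetical cyclic template: cells sit on a ring, and similarity decays
    # with circular distance (measured as a fraction of the full cycle).
    idx = np.arange(n_cells)
    d = np.abs(idx[:, None] - idx[None, :])
    d = np.minimum(d, n_cells - d) / n_cells
    return np.exp(-(d / bandwidth) ** 2)

def spectral_filter(expr, template, n_components=5):
    # expr: (n_cells, n_genes) with rows in cyclic order.
    # Project every gene profile onto the template's top eigenvectors; for a
    # circulant template these are the lowest-frequency Fourier modes.
    eigvals, eigvecs = np.linalg.eigh(template)
    top = eigvecs[:, np.argsort(eigvals)[::-1][:n_components]]
    return top @ (top.T @ expr)

rng = np.random.default_rng(0)
n_cells, n_genes = 200, 30
phase = 2 * np.pi * np.arange(n_cells) / n_cells
offsets = rng.uniform(0, 2 * np.pi, n_genes)
clean = np.cos(phase[:, None] + offsets[None, :])  # synthetic cyclic gene programs
noisy = clean + 0.5 * rng.standard_normal(clean.shape)

filtered = spectral_filter(noisy, circular_template(n_cells))
print(np.abs(noisy - clean).mean(), np.abs(filtered - clean).mean())
```

With five components the filter keeps the constant mode and the first two frequency pairs, which contain the synthetic cyclic signal while discarding most of the noise.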

Results

https://www.nature.com/articles/s41587-023-01663-5

What do AI Models Know? A Case Study on Visual Question Answering

A major challenge in recent AI literature is understanding why state-of-the-art deep learning models succeed on a range of datasets yet degrade severely when presented with examples that vary slightly from their training distribution. In this proposal, we will examine this question in the context of visual question answering, a challenging task that requires models to reason jointly over images and text. We will start our exploration with the GQA dataset, which, along with images and text, includes a rich semantic scene graph representing the spatial relations between objects in each image, and thus lends itself to probing through high-quality automatic manipulation.

In preliminary work, we augmented GQA with examples that vary slightly from the original questions and showed that here, too, high-performing models perform much worse on the augmented questions than on the original ones. Our proposal will analyze these results, exploring the reasons for the drop in performance and what makes our new questions more challenging. We also plan to generalize the reasons we find to other visual question answering datasets, and more broadly to other AI datasets. Given those insights, we will augment the training set with instances that capture model “blind spots” in an attempt to improve the model’s generalization ability. Our results will improve our understanding of what state-of-the-art AI models know, what they are still missing, and how we can improve them based on this new understanding.
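Scene graphs make it possible to generate minimally different questions whose answers are still known. The sketch below illustrates that idea with a hypothetical, heavily simplified scene-graph format (a set of subject, predicate, object triples) and a single perturbation that flips a left/right relation. It does not use GQA's actual data schema or the project's augmentation code; `Relation`, `answer` and `perturb` are illustrative names.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Relation:
    # Hypothetical scene-graph edge: "<subject> <predicate> <obj>".
    subject: str
    predicate: str
    obj: str

OPPOSITE = {"to the left of": "to the right of", "to the right of": "to the left of"}

def answer(graph, subject, predicate, obj):
    # The answer is read off the scene graph, not annotated by hand.
    return "yes" if Relation(subject, predicate, obj) in graph else "no"

def perturb(graph, rel):
    # Build an original yes/no question and a minimally changed variant
    # (relation flipped), each paired with its graph-derived answer.
    original = (f"Is the {rel.subject} {rel.predicate} the {rel.obj}?",
                answer(graph, rel.subject, rel.predicate, rel.obj))
    flipped = OPPOSITE[rel.predicate]
    variant = (f"Is the {rel.subject} {flipped} the {rel.obj}?",
               answer(graph, rel.subject, flipped, rel.obj))
    return original, variant

graph = {Relation("cup", "to the left of", "plate"),
         Relation("plate", "to the right of", "cup")}
for question, ans in perturb(graph, Relation("cup", "to the left of", "plate")):
    print(question, "->", ans)
# Is the cup to the left of the plate? -> yes
# Is the cup to the right of the plate? -> no
```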

Goal

To achieve a better understanding of the limitations of current state-of-the-art models and datasets, as well as ways to improve them.

Researchers

What we did

In progress

Results

Forthcoming