Anomaly detection using set representations and density estimations

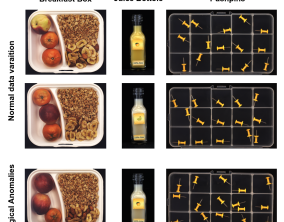

Anomaly detection aims to automatically identify samples that exhibit unexpected behavior. We tackle the challenging task of detecting anomalies consisting of an unusual combination of normal elements (`logical anomalies`). For example, consider the case where normal images contain two screws and two nuts but anomalous images may contain one screw and three nuts. We propose to detect logical anomalies using set representations. We score anomalies using density estimation on the set of representations of local elements. Our simple-to-implement approach outperforms the state-of-the-art in image-level logical anomaly detection and sequence-level time series anomaly detection.(nuts or screws) occur in natural images, previous anomaly detection methods relying on anomalous patches would not succeed. Instead, a more holistic understanding of the image is required. You can check out the preprint at: https://arxiv.org/pdf/2302.12245.pdf

Goal

Set Features for Fine-grained Anomaly Detection

Researchers

What we did

Fine-grained anomaly detection has recently been dominated by segmentationbased approaches. These approaches first classify each element of the sample (e.g., image patch) as normal or anomalous and then classify the entire sample as anomalous if it contains anomalous elements. However, such approaches do not extend to scenarios where the anomalies are expressed by an unusual combination of normal elements. We overcome this limitation by proposing set features that model each sample by the distribution of its elements. We compute the anomaly score of each sample using a simple density estimation method. Our simple-to-implement approach1 outperforms the state-of-the-art in image level logical anomaly detection (+3.4%) and sequence-level time series anomaly detection (+2.4%).

Results

Preprint: https://arxiv.org/pdf/2302.12245.pdf

What do AI Models Know? A Case Study on Visual Question Answering

A major challenge in recent AI literature is understanding why state-of-the-art deep learning models show great success on a range of datasets while they severely degrade in performance when presented with examples which slightly vary from their training distribution. In this proposal, we will examine this question in the context of visual question-answering, a challenging task which requires models to jointly reason over images and text. We will start our exploration with the GQA dataset, which, along with images and text, also includes a rich semantic scene graph, representing the spatial relations between objects in the image, and thus lends itself to probing through high-quality automatic manipulation. In prelminary work we have augmented GQA with examples that vary slightly from the original questions, and shown that here too high-performing models perform much worse on the augmented questions compared to the original ones. Our proposal will analyze our results, exploring the reasons for the drop in performance, and what makes our new questions more challenging. We also plan to to generalize the reasons we find to other datasets of visual question answering, and more broadly to other AI datasets. Given those insights, we will augment the training set with instances that capture model “blind spots”, in an attempt to improve the model’s generalization ability. Our results will improve our understanding of what state-of-the-art AI models know, what they are still missing, and how can we improve them based on this new understanding.

Goal

To achieve a better understanding of the limitations of current state-of-the-art models and datasets, as well as ways to improve them.

Researchers

What we did

In progress

Results

Forthcoming